Schatz Calls For AI-Generated Content To Be Clearly Labeled To Combat Misinformation, Fraud

New Schatz-Kennedy Bill Would Require Companies to Label AI-Made Content

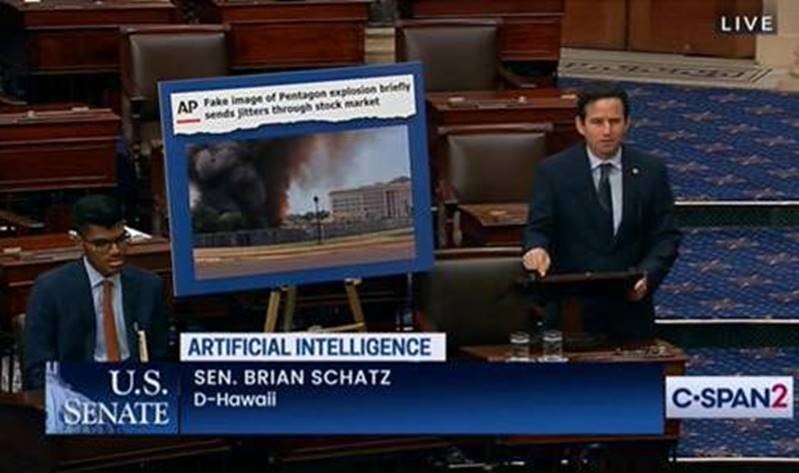

WASHINGTON – Today, U.S. Senator Brian Schatz (D-Hawai‘i) spoke on the Senate floor about the need for content generated by artificial intelligence (AI) to be labeled in an effort to prevent rampant misinformation and fraud as use of the technology increases. Schatz introduced a bipartisan bill with Senator John Kennedy (R-La.) that would require clear labels and disclosures on AI-made content and AI chatbots.

“Content made by AI should be clearly labeled as such so people know what they’re looking at. That’s exactly what the bipartisan AI Labeling Act that Senator Kennedy and I introduced calls for,” said Senator Schatz. “It puts the onus where it belongs – on companies, not consumers. Because people shouldn’t have to double and triple check, or parse through thick lines of code, to find out whether something was made by AI. It should be right there, in the open, clearly marked with a label.”

Of the challenges presented by AI-generated content, Senator Schatz added, “Deception is not new. Fraud is not new. Misinformation is not new. These are all age-old problems. What is new, though, is how quickly and easily someone can deceive or defraud – and do it at staggering scale. With powerful generative AI tools at their fingertips, all con artists need are just a few minutes to spin up a scam or a lie.”

The full text of his remarks can be found below and a video is available here.

Seeing is believing, we often say. But that's not really true anymore. Because thanks to artificial intelligence, we're increasingly encountering fake images, doctored videos, and manipulated audio. Whether we're watching TV, answering the phone, or scrolling through our social media feeds, it has become harder and harder to trust our own eyes and our own ears. The boundaries of reality are becoming blurrier every day.

We've always consumed information under the assumption that what we're seeing and hearing is coming from the source that it says it's from – and that a human has created it. It's such a basic notion and it's left unsaid and taken for granted. But right now, that assumption is under threat.

Deception is not new. Fraud is not new. Misinformation is not new. These are age-old problems, of course. What is new, though, is how quickly and easily someone can deceive or defraud – and do it at a staggering scale. With powerful generative AI tools at their fingertips, all con artists need are just a few minutes to spin up a scam or a lie.

Doctored images falsely claiming that there was an explosion at the Pentagon. Fake advertisements using the likenesses of celebrities like Tom Hanks to peddle products. Phone calls that replicate the voice of family members purporting to be kidnapped and needing money. Manipulated audio clips of elected officials saying things they did not say. These are just some of the examples of misuse we've already seen.

This is not a parade of horribles about the future of AI. These are things that already happened and we're only scratching the surface of what's possible with AI. And because the possibilities are so vast, much of it yet to be discovered, it's easy to feel overwhelmed by it all – to think it's so complex, you don't even know where to start.

But we do know where to start. This issue of distinguishing whether content is made by a human or made by a machine actually has a very straightforward solution. Content made by AI should be clearly labeled as such so that people know what they're looking at. That's exactly what the bipartisan AI Labeling Act that Senator Kennedy and I introduced calls for.

It puts the onus where it belongs – on the companies and not the consumers. Very straightforward, because people shouldn't have to double and triple check or parse through thick lines of code to find out whether something was made by AI. It should be right there, in the open, clearly marked with a label.

Labels will help people to be informed. They'll also help companies using AI build trust in their content. We have a crisis of trust in our information sources, in large part due to polarization and misinformation. But if the current situation seems bad, without guardrails, the coming onslaught of AI-generated content will make the problem much, much worse. Misinformation will multiply, scams will skyrocket. Labels are an important antidote to these problems in the age of AI.

Whether we're ready or not, AI is here, and in the not-too-distant future it will reshape virtually every facet of our lives: how we work, how our kids learn in school, how we get health care, to name a few. So to take action too late or, worse, to do nothing at all is not a good option. We've seen that movie before with foreign interference in our elections; with medical misinformation that claimed so many lives; with data breaches that left Americans exposed and vulnerable.

This moment requires us to get serious about legislating proactively, not belatedly reacting to the latest innovation. Yes, Congress has a lot more to learn about AI, both its opportunities and threats. And yes, there's no simple answer or single solution for a very, very complex challenge and set of opportunities. But there's one thing we know to be true right now: people deserve to know if the content they're encountering was made by a human or not. This isn't a radical new idea; it is common sense.

There's a long road ahead for regulating AI in the policymaking space. But that should not prevent us from doing this good and sensible thing as soon as we can.

###